Shipping code quickly with confidence: Synthetic Testing

Every engineer wants to ship high-quality software systems, but the “how” isn’t always straightforward. To help, we designed a testing series, “Shipping code quickly with confidence.” Using code from sendsecure.ly, a Basis Theory lab project, readers will bring together the testing layers and strategies used by our data tokenization platform to achieve its 0% critical and major defect rate. You can find links to all published articles at the bottom of each post.

Welcome to the fourth chapter in our testing series! So far, we’ve covered the testing fundamentals we practice at Basis Theory and our approach to API and UI Acceptance and Integration Testing. Let’s increase our scope a bit and gain confidence in the health of our production environment with Synthetic Testing.

What Are Synthetic Tests?

Instead of having users find problems with your production environment, you can use machines that will find those problems before any real users encounter them. Synthetic testing, or synthetic monitoring, simulates user behavior and runs external to your application and hosting solution, ensuring your app works in your deployed environment. These tests give you considerably higher confidence in the stability of your systems and may even be considered crucial for maintenance.

What do Synthetic Tests cover?

Your entire tech stack gets tested thoroughly with these kinds of tests. When a test is executed, it starts external to your system and appears as a real user interacting with the system. In doing so, synthetic tests check your app’s main functionality, the infrastructure that’s hosting it, and all of its dependencies. However, unlike integration tests, which test whether those dependencies are behaving as expected, these tests focus on the health of your app.

Synthetic Test Feedback Loops

Synthetic tests run in production, meaning any issues they discover are found very late in the software development life cycle and therefore are more expensive to address. For this reason, we use Synthetic tests to test the health of our systems, not to find bugs.

Choosing a Synthetic Testing Service

Synthetic testing services offer numerous features, making some more attractive than others depending on your use case. Here are some features that we found to be particularly helpful to us:

- API testing

- Browser testing

- Historical data of previous runs

- Alerting integrations with PagerDuty

- Multi-region testing

- Synthetic tests as code

- Exportable metrics for test runs

To find the right tool for your use case, we suggest you clearly define the problem you’re looking to solve with synthetic testing and the absolute constraints of that problem. Here are a few questions that might help you find the right solution:

- What parts of your system do you need to ensure are healthy? An API? A web application?

- Which types of jobs do you need your notifications to perform? (e.g. PagerDuty has many integrations that allow us to customize workflows to fit our needs)

- How much money are you willing to spend on this service?

We chose DataDog to run Synthetic Tests at Basis Theory, but, again, what works for us may not make sense for you and your team.

How to write Synthetic Tests

As I’m writing this, we have no assurances that sendsecure.ly is healthy and serving requests in our deployed environment. So let’s add some tests to ensure its availability and that the critical paths function as expected. For this, we’ll write some API and UI Synthetic Tests. We’ll be using DataDog’s Synthetic Testing service and configuring much of it via Pulumi in TypeScript. All the code used in this post is available here.

Health check tests

We check our app’s health using an endpoint that runs light checks against any dependencies and returns a successful status if the application itself is up and running.
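To make the idea concrete, here is a minimal sketch of how such an endpoint might turn its light dependency checks into a single status. It is not the sendsecure.ly implementation: the probe names, the response shape, and the choice to return 503 when any probe fails are all assumptions made for illustration.

```typescript
// Result of one lightweight dependency probe (names are hypothetical).
interface ProbeResult {
  name: string; // e.g. "token-vault"
  healthy: boolean; // true when the probe succeeded
}

interface HealthResponse {
  statusCode: 200 | 503;
  body: { status: 'ok' | 'degraded'; failing: string[] };
}

// Assumption: any failing probe makes the endpoint answer 503, so a
// statusCode assertion in a synthetic test would fail.
function summarizeHealth(probes: ProbeResult[]): HealthResponse {
  const failing = probes.filter((p) => !p.healthy).map((p) => p.name);
  return failing.length === 0
    ? { statusCode: 200, body: { status: 'ok', failing } }
    : { statusCode: 503, body: { status: 'degraded', failing } };
}

const healthy = summarizeHealth([{ name: 'token-vault', healthy: true }]);
const degraded = summarizeHealth([
  { name: 'token-vault', healthy: true },
  { name: 'cache', healthy: false },
]);

console.log(healthy.statusCode, degraded.statusCode, degraded.body.failing);
```

Keeping the probes light matters here because the synthetic test calls this endpoint on a short interval.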

The code below will provision a Synthetic Test in DataDog using Pulumi. We are making a GET request to our health endpoint and expecting a 200 response within 5 seconds. We’re also defining that this check runs every 5 minutes from 4 locations, mainly in the US.

    // health check
    import * as datadog from '@pulumi/datadog';

    const healthCheckName = 'sendsecurely-health-check';

    const sendSecurelyHealthCheck = new datadog.SyntheticsTest(healthCheckName, {
      name: healthCheckName,
      status: 'live',
      type: 'api',
      subtype: 'http',
      requestDefinition: {
        method: 'GET',
        url: 'https://sendsecure.ly/api/healthz',
        timeout: 5, // seconds
      },
      // Expect a 200 response from the health endpoint.
      assertions: [
        {
          type: 'statusCode',
          operator: 'is',
          target: '200',
        },
      ],
      // Four locations, mainly in the US.
      locations: [
        'aws:sa-east-1',
        'aws:us-east-2',
        'aws:us-west-2',
        'aws:us-west-1',
      ],
      optionsList: {
        followRedirects: true,
        minLocationFailed: 2, // alert only when at least 2 locations fail
        monitorName: healthCheckName,
        monitorPriority: 2,
        tickEvery: 300, // seconds, i.e. every 5 minutes
        …
      },
      …
    });

When to run Synthetic Tests

There are two main options for when to run Synthetic Tests: on an interval or on an event. Each has some considerations to keep in mind.

When it comes to intervals, which run tests on a predetermined schedule, weigh how long you’re willing to let a fault go undetected against how often you’re willing to be alerted. For example, you don’t want to burden your system unnecessarily or send a notification for every momentary network blip.
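A little arithmetic makes that trade-off easier to reason about. The sketch below uses a deliberately simplified model (not DataDog’s actual scheduler): a fault starts right after a passing run, so it is first seen one full interval later, and each retry adds its wait plus one request timeout before an alert can fire. The function and parameter names are ours.

```typescript
// Simplified worst-case time, in seconds, between a fault starting and an
// alert firing. Assumes the fault begins immediately after a passing run.
function worstCaseDetectionSeconds(
  tickEverySeconds: number,
  timeoutSeconds: number,
  retries = 0,
  retryWaitSeconds = 0,
): number {
  return (
    tickEverySeconds +
    timeoutSeconds +
    retries * (retryWaitSeconds + timeoutSeconds)
  );
}

// Number of scheduled runs per day across all locations.
function runsPerDay(tickEverySeconds: number, locations: number): number {
  return Math.floor(86400 / tickEverySeconds) * locations;
}

console.log(worstCaseDetectionSeconds(300, 5)); // 305
console.log(worstCaseDetectionSeconds(60, 5)); // 65
console.log(runsPerDay(300, 4)); // 1152
console.log(runsPerDay(60, 4)); // 5760
```

Under this model, moving from a 5-minute to a 1-minute interval cuts the worst-case detection time from about five minutes to about one, at the cost of five times as many runs.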

As for event triggers, which can run each time you or your team push a change to production, be mindful that these won’t check external dependencies. Relying solely on this strategy could mean missing breaking changes from third party providers.
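For the event-driven option, a deploy pipeline can ask DataDog to run specific tests right after a release. The sketch below only builds the HTTP request; it does not send it. The endpoint path, header names, and body shape reflect our understanding of DataDog’s public API for triggering tests from CI/CD pipelines and should be checked against the current API reference. The function name, the placeholder test ID, and the placeholder keys are hypothetical.

```typescript
interface TriggerRequest {
  url: string;
  method: 'POST';
  headers: Record<string, string>;
  body: string;
}

// Assumption: endpoint, headers, and payload follow DataDog's
// "trigger tests from CI/CD pipelines" API; verify before relying on them.
function buildTriggerRequest(
  publicIds: string[],
  apiKey: string,
  appKey: string,
  site = 'datadoghq.com',
): TriggerRequest {
  return {
    url: `https://api.${site}/api/v1/synthetics/tests/trigger/ci`,
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      'DD-API-KEY': apiKey,
      'DD-APPLICATION-KEY': appKey,
    },
    body: JSON.stringify({
      tests: publicIds.map((id) => ({ public_id: id })),
    }),
  };
}

// 'abc-def-ghi' is a placeholder public ID, not a real test.
const request = buildTriggerRequest(['abc-def-ghi'], 'api-key', 'app-key');
console.log(request.url);
console.log(request.body);
```

In a pipeline, the returned object could be handed to an HTTP client after the release step, for example `fetch(request.url, request)`.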

As you can see in the Pulumi code, we run our health check tests on a high-frequency interval and our functional checks (API and browser tests) on a lower-frequency interval.

Where to run Synthetic Tests

Which part of the world is most critical to your business? This answer will guide you in deciding where geographically to run your checks. Additionally, you can check the latency of your requests from different parts of the world, providing valuable insights into how users from various regions experience your application.

API tests

In this test, we ensure the system functions by walking through the multiple endpoints exposed by sendsecure.ly. First, we create a secret, then get the details of that secret, and finally read the secret itself. We expect a 200 response from each step.

    // api check
    const apiCheckName = 'sendsecurely-api-check';

    const sendSecurelyApiCheck = new datadog.SyntheticsTest(apiCheckName, {
      name: apiCheckName,
      status: 'live',
      type: 'api',
      subtype: 'multi',
      apiSteps: [
        // Step 1: create a secret and capture its id for the later steps.
        {
          assertions: [
            {
              operator: 'is',
              target: '200',
              type: 'statusCode',
            },
          ],
          name: 'Create secret',
          requestDefinition: {
            method: 'POST',
            url: 'https://sendsecure.ly/api/secrets',
            timeout: 5,
            body: '{ "data": "Synthetic Testing secret!", "ttl": 30 }',
          },
          requestHeaders: {
            'Content-Type': 'application/json',
          },
          subtype: 'http',
          extractedValues: [
            {
              name: 'SECRET_ID',
              type: 'http_body',
              parser: {
                type: 'json_path',
                value: 'id',
              },
            },
          ],
        },
        // Step 2: get the details of the secret created in step 1.
        {
          assertions: [
            {
              operator: 'is',
              target: '200',
              type: 'statusCode',
            },
          ],
          name: 'Get secret details',
          requestDefinition: {
            method: 'GET',
            url: 'https://sendsecure.ly/api/secrets/{{ SECRET_ID }}/details',
            timeout: 5,
          },
          requestHeaders: {
            'Content-Type': 'application/json',
          },
          subtype: 'http',
        },
        // Step 3: read the secret itself.
        {
          assertions: [
            {
              operator: 'is',
              target: '200',
              type: 'statusCode',
            },
          ],
          name: 'Read secret',
          requestDefinition: {
            method: 'GET',
            url: 'https://sendsecure.ly/api/secrets/{{ SECRET_ID }}',
            timeout: 5,
          },
          requestHeaders: {
            'Content-Type': 'application/json',
          },
          subtype: 'http',
        },
      ],
      …
    });
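The steps in a multistep test are chained through extracted values: the first step pulls the secret’s id out of the response body, and later steps can reference it with DataDog’s double-curly-brace variable syntax, such as `{{ SECRET_ID }}`. The sketch below imitates that flow locally so you can see what happens to the URLs. It is an illustration, not DataDog’s implementation, and the sample response body and helper names are hypothetical.

```typescript
// Pull a top-level string field out of a JSON response body, standing in
// for the json_path parser in the configuration above.
function extractField(body: string, field: string): string {
  const parsed: Record<string, unknown> = JSON.parse(body);
  const value = parsed[field];
  if (typeof value !== 'string') {
    throw new Error(`Field "${field}" is missing or not a string`);
  }
  return value;
}

// Replace {{ NAME }} placeholders with values gathered from earlier steps.
// Unknown placeholders are left untouched.
function renderTemplate(
  template: string,
  vars: Record<string, string>,
): string {
  return template.replace(
    /\{\{\s*(\w+)\s*\}\}/g,
    (match, name: string) => vars[name] ?? match,
  );
}

// Hypothetical response from the "Create secret" step.
const createResponse = '{ "id": "abc123" }';
const vars = { SECRET_ID: extractField(createResponse, 'id') };

const detailsUrl = renderTemplate(
  'https://sendsecure.ly/api/secrets/{{ SECRET_ID }}/details',
  vars,
);
const readUrl = renderTemplate(
  'https://sendsecure.ly/api/secrets/{{ SECRET_ID }}',
  vars,
);

console.log(detailsUrl);
console.log(readUrl);
```

If the first step fails to return an id, the later URLs cannot be built, which is why a failure in "Create secret" fails the whole test.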

Happy paths

At Basis Theory, we focus on writing Synthetic Tests along our happy paths. We want to stay away from sad paths and exhaustive tests to keep time and financial costs low—we leave these to Acceptance and Integration Tests.

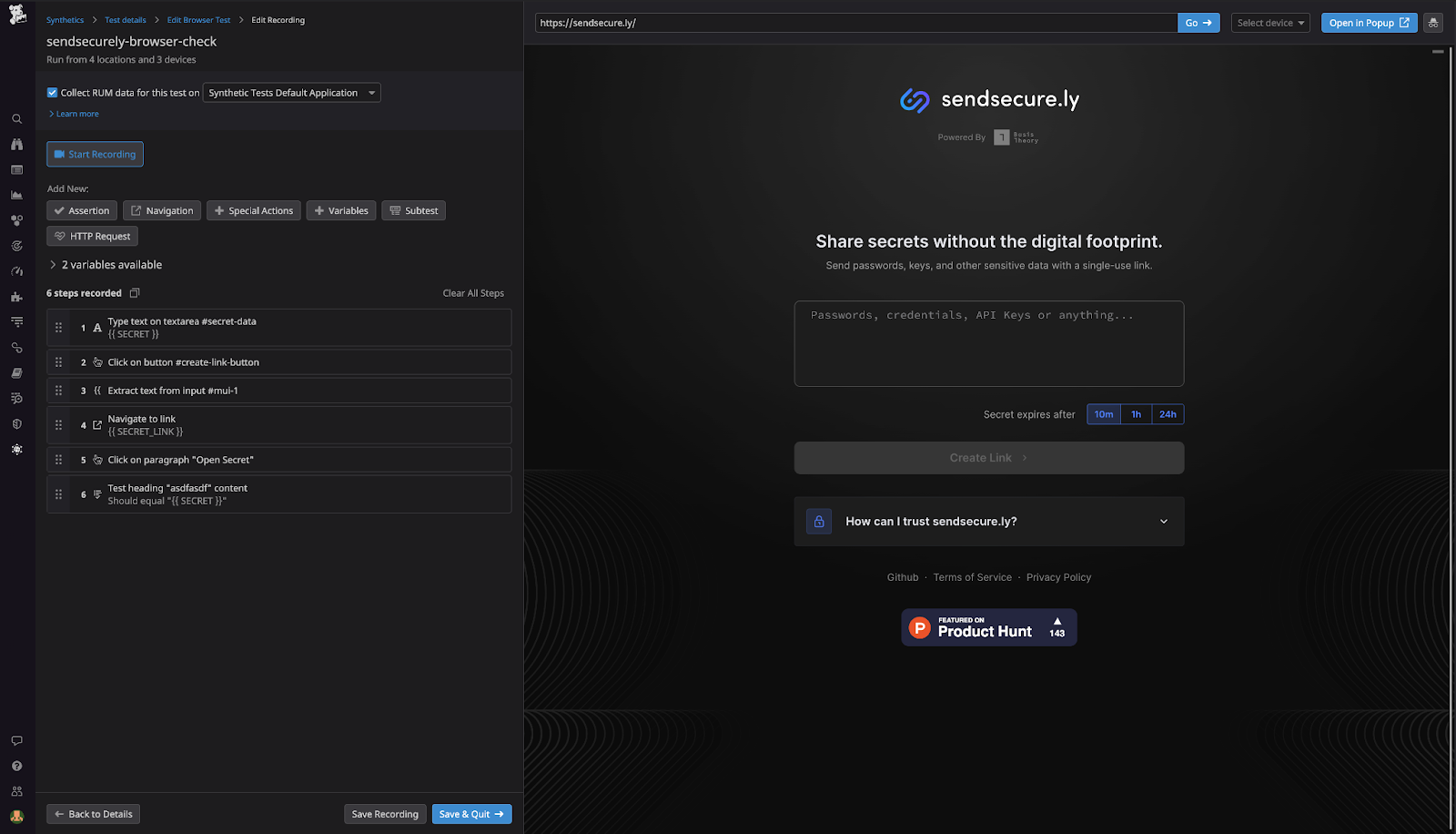

Browser tests

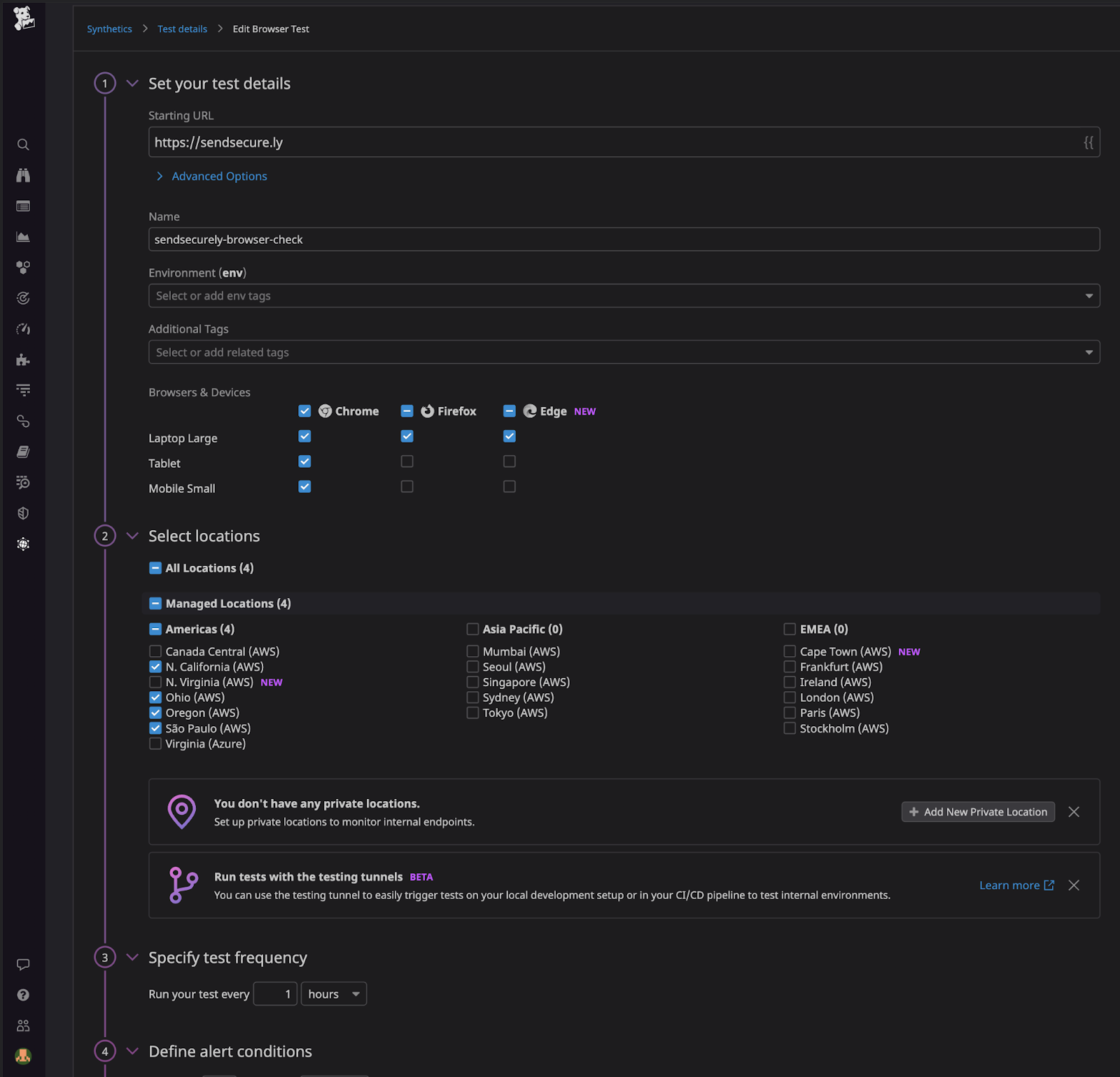

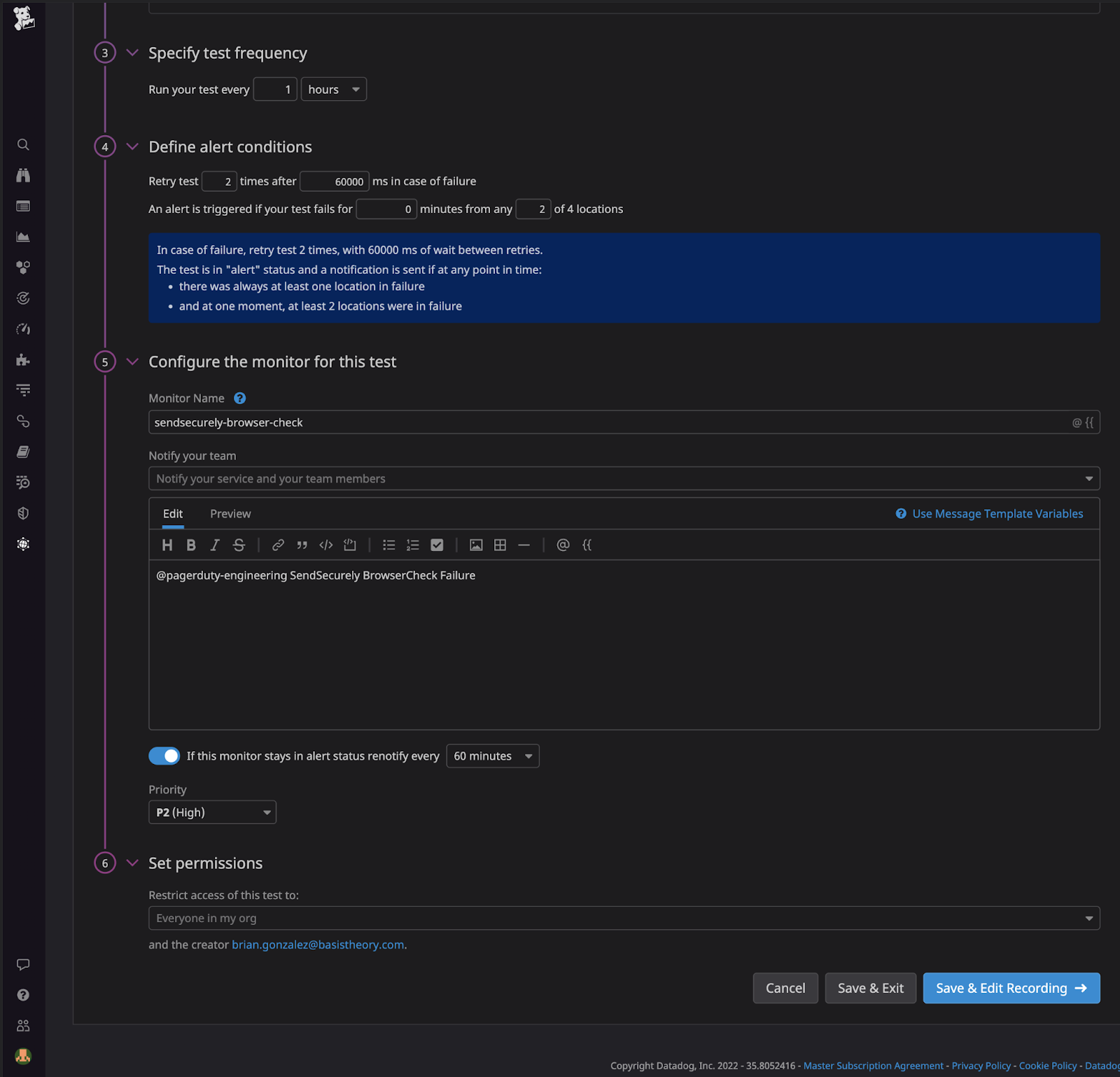

DataDog has more extensive support for browser tests through their website. The following are screenshots of the browser tests created to test sendsecure.ly.

As you can see, we have our browser tests running from the same locations as the other tests, and we’ve set these to run on Chrome, Firefox, and Edge browsers on various devices.

We retry this test at least two times with one minute in between failures and set up alerts with our PagerDuty integration to ensure that any problems notify our engineering team.

Using retries

After investigating an outage, we add retries where the failure turned out to be transient, so the team isn’t alerted on every momentary failure. Be careful, though: you don’t want retries to mask actual failures that should be addressed.

At Basis Theory, we start with no retries, then add retries if we find that we’re getting blips in outages.
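To show the effect retries have on alerting, here is a small sketch of the decision: a run only counts as failed, and so only alerts, when the initial attempt and every allowed retry fail. It is a simplified model that ignores the wait between retries, and the helper names are ours.

```typescript
// Runs one attempt plus up to maxRetries retries. Returns true when every
// attempt failed, meaning the run should raise an alert.
function failsAfterRetries(attempt: () => boolean, maxRetries: number): boolean {
  for (let i = 0; i <= maxRetries; i++) {
    if (attempt()) {
      return false; // an attempt passed, so no alert
    }
  }
  return true;
}

// Test helper: replays a fixed list of outcomes (true = passed).
// Once the list is exhausted, further attempts count as failures.
function scripted(outcomes: boolean[]): () => boolean {
  let i = 0;
  return () => outcomes[i++] ?? false;
}

// No retries: a single failure alerts.
console.log(failsAfterRetries(scripted([false]), 0)); // true
// Two retries: a blip that recovers on the first retry does not alert.
console.log(failsAfterRetries(scripted([false, true]), 2)); // false
// Two retries: a sustained failure still alerts.
console.log(failsAfterRetries(scripted([false, false, false]), 2)); // true
```

The second case is the "blip" we want to filter out. The third shows that retries delay, but do not hide, a real outage.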

Alerting

There is an art to managing the priority and number of alerts Synthetic Tests initiate. Alerting too much will desensitize your responders, which can lead to neglected alerts. This well-documented phenomenon, called alert (or alarm) fatigue, results in prolonged production issues.

We found that alerting less ensured our alerts had greater significance, but there is no immediate right or wrong answer to this. The only wrong answers lie in the extremes, and in not tuning alerts for your scenario.

In our final screenshot, we have our assertions for our browser test. These tests assert that we can create and read a secret through the UI, our main and most critical flow for this app. With this final test, we feel confident that our system is healthy and serving requests as expected in production.

Pulse check: Confidence level

The goal of any test is to add confidence that the code, application, or system behaves as expected. So far, we’ve covered Acceptance and Integration Tests, but how confident are we after our Synthetic Tests?

Are we confident that the application’s programmable interface does what we expect? Yes, the API Acceptance Tests provide this confidence by validating the system's behavior across various happy and sad-path scenarios.

Are we confident that the application can communicate with external dependencies? Yes, API Integration Tests provide confidence that our application can communicate with, and receive traffic from, external dependencies in a deployed environment. E2E Integration tests cover the systems as a whole, replicating user behavior in a production-like environment.

Are we confident that the application’s user interface does what we expect? Yes. The UI Acceptance Tests give us confidence that the UI is behaving as expected.

Are we confident that the application is always available? Yes, we are! Synthetic Tests ensure that our application is healthy and available to serve requests.

Are we confident that the application can handle production throughput and beyond? Nope, not yet.

Are we confident that the application is free of security vulnerabilities? Not yet.

Are we confident that the application is secure against common attack vectors (e.g., OWASP)? Not yet.

Don't worry. By the end of this series, "Yes" will be the answer to all of the above questions. So stay tuned for our upcoming posts on the remaining testing layers, when to use them, and how they add confidence to our SDLC!

Have questions or feedback? Join the conversation in our Slack community!
