What to Know About Tokenization

Historically, organizations have treated data security as a burdensome expense: necessary, but adding no features. Tokenization has reframed the conversation, and its use has exploded as a result. Today, we can tokenize anything from credit card primary account numbers (PANs) to one-time debit card transactions or Social Security numbers.

As a merchant, to get the most out of tokenization, you need to understand:

- What tokenization is, why it’s important for payments, and how it compares to encryption.

- How tokenization helps you achieve PCI compliance and meet the 12 PCI DSS requirements.

- How developers can use a tokenization platform to secure protected data without the costs and liabilities of building their own system.

A token is a non-exploitable identifier that references sensitive data. Tokens can take any shape, are safe to expose, and are easy to integrate into payment workflows or other workflows that handle sensitive data. Tokenization, therefore, is the process of storing sensitive data in a secure vault and creating a token that references it.

The tokenization process is handled by a tokenization platform and, in its simplest form, works in three sequential steps:

- Sensitive data is entered into a tokenization platform.

- The tokenization platform securely stores the sensitive data.

- The platform provides a token for use in place of the sensitive data.
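The three steps above can be sketched as a toy, in-memory vault. `TokenVault`, `tokenize`, `detokenize`, and the `tok_` prefix are hypothetical names chosen for illustration, not any platform's API. A real platform would also encrypt the stored data, authenticate callers, and persist the vault durably.

```python
import secrets
from typing import Dict


class TokenVault:
    """Toy stand-in for a tokenization platform's vault (hypothetical API)."""

    def __init__(self) -> None:
        # token -> original sensitive value; only the vault holds this mapping
        self._store: Dict[str, str] = {}

    def tokenize(self, sensitive_value: str) -> str:
        # Steps 1 and 2: accept the sensitive data and store it in the vault.
        token = "tok_" + secrets.token_urlsafe(16)
        self._store[token] = sensitive_value
        # Step 3: return a random token to use in place of the data.
        return token

    def detokenize(self, token: str) -> str:
        # Mapping a token back to its value requires access to the vault.
        return self._store[token]


vault = TokenVault()
card_token = vault.tokenize("4242424242424242")  # a common test card number
print(card_token)                    # random on every run, starts with "tok_"
print(vault.detokenize(card_token))  # 4242424242424242
```

The token comes from `secrets` rather than from the card number, so tokenizing the same card twice yields two unrelated tokens.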

Because a token replaces sensitive card data and the cardholder data itself is stored outside your systems, your PCI compliance scope shrinks considerably. In practical terms, fewer PCI requirements apply to the systems you administer yourself, which reduces both cost and risk.

Comparing Payment Token Types

“Payment tokens” and “network tokens” differ in several key ways, particularly in utility and flexibility. Both mask sensitive payment data, but they do so in different ways and through different partners.

Network tokens are issued by the card networks and can only be used through the issuing network or its partner merchants.

Universal tokens or PSP tokens can be used in place of plaintext payment data for transactions.

Both offer enhanced security for storing and utilizing payment data, reducing overall fraud and risk levels.

What does a tokenization platform do?

A third-party tokenization platform has two main components: a token vault, and the connections and services built around it.

- The token vault offers a secure and PCI-compliant location to store original data (e.g., credit card or Social Security numbers).

- The services allow merchants to collect, abstract, secure, and use tokens or variations of the underlying data in transaction workflows.

Together, these two components give organizations a way to collect, store, and use sensitive data without taking on the ongoing costs, delays, and liabilities of securing it themselves in a PCI-compliant environment.

How are tokens used?

Instead of using the original sensitive data, developers and their applications use previously generated tokens to perform the operations that would normally require that data, such as running analyses, generating documents, or verifying customers.

To see how this works in practice, let’s take a look at three scenarios:

Example 1: Payment Tokenization

Merchants are often looking primarily for a payment tokenization approach that is faster to implement and cheaper to maintain than network tokenization.

Payment tokenization can be delivered by three kinds of providers: card networks, payment service providers (PSPs), or third-party tokenization providers.

Example 2: Protecting PII

A company needs Personally Identifiable Information (PII) to generate and send tax documents for its employees. The company doesn’t want to take on the trouble and expense of securing its employees’ data within its own systems, so it uses a tokenization platform to store sensitive employee data. During onboarding, employees provide their PII through a form hosted on the company’s website.

Although the company hosts the website, the form uses an iframe that captures the PII and sends it directly to the tokenization platform. The platform generates tokens to represent the PII and returns them to the company, whose team uses them in place of the raw data. When tax documents are due, the company has the tokenization platform generate them with the necessary sensitive information, so the company never handles the raw PII or shoulders the compliance burden of storing it.
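One way to picture the document step is a template that contains only tokens, which the platform fills in on its side. This self-contained sketch is hypothetical: the `{{ tok_... }}` placeholder syntax and the `render` method are assumptions for illustration, not a documented feature of any platform, and the SSN is fake.

```python
import re
import secrets
from typing import Dict

# Matches placeholders such as "{{ tok_0123abcd... }}" (hypothetical syntax).
PLACEHOLDER = re.compile(r"\{\{\s*(tok_[0-9a-f]+)\s*\}\}")


class TokenVault:
    """Toy platform that stores PII and renders documents (hypothetical API)."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        token = "tok_" + secrets.token_hex(8)
        self._store[token] = value
        return token

    def render(self, template: str) -> str:
        # Runs inside the platform: each placeholder is replaced with the stored value.
        return PLACEHOLDER.sub(lambda m: self._store[m.group(1)], template)


platform = TokenVault()
# Onboarding: the iframe sends PII to the platform; the company keeps only tokens.
name_token = platform.tokenize("Jane Doe")
ssn_token = platform.tokenize("123-45-6789")  # fake SSN

# Tax time: the company builds a template from tokens; the platform fills it in.
template = "Employee: {{ %s }}\nSSN: {{ %s }}" % (name_token, ssn_token)
tax_document = platform.render(template)
```

For simplicity, this toy returns the rendered text to the caller. In the scenario above, the platform would presumably deliver the finished document itself so the raw PII stays out of the company's systems.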

Example 3: Minimizing PCI Compliance Scope

A company collects credit card information from its customers to process payments on its e-commerce website. As in the previous example, it doesn’t want to build a compliant system that gives it no competitive advantage. It also doesn’t want to be locked into a specific payment processor, so it opts for a tokenization platform that can send payments to many payment processors.

When customers reach the payment step on the e-commerce site, an iframe sends their credit card information directly to the tokenization platform, and tokens representing that cardholder data are returned to the company. When a customer completes the purchase, the company sends a request containing the customer’s token through the platform’s proxy, which swaps the token for the card data and calls a payment processor to charge the customer’s payment method. Because raw card data never touches the company’s systems, the company avoids most of the stringent PCI DSS requirements it would otherwise have to meet.
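The checkout flow can be sketched with a toy proxy that detokenizes on the platform side and routes the charge to whichever processor the merchant names. The processor functions, `PaymentProxy`, and its methods are hypothetical stand-ins. Real processors are called over their own HTTP APIs, which this sketch does not model.

```python
import secrets
from typing import Callable, Dict


# Hypothetical processor clients; a real integration would call each processor's API.
def charge_with_processor_a(card_number: str, amount_cents: int) -> str:
    return f"processor-a approved ****{card_number[-4:]} for {amount_cents}"


def charge_with_processor_b(card_number: str, amount_cents: int) -> str:
    return f"processor-b approved ****{card_number[-4:]} for {amount_cents}"


PROCESSORS: Dict[str, Callable[[str, int], str]] = {
    "a": charge_with_processor_a,
    "b": charge_with_processor_b,
}


class PaymentProxy:
    """Toy tokenization platform with a payment proxy (hypothetical API)."""

    def __init__(self) -> None:
        self._vault: Dict[str, str] = {}

    def tokenize(self, card_number: str) -> str:
        token = "tok_" + secrets.token_hex(8)
        self._vault[token] = card_number
        return token

    def charge(self, token: str, amount_cents: int, processor: str) -> str:
        # The card number is looked up here, inside the platform,
        # and handed straight to the chosen processor.
        card_number = self._vault[token]
        return PROCESSORS[processor](card_number, amount_cents)


proxy = PaymentProxy()
token = proxy.tokenize("4242424242424242")  # done by the checkout iframe
print(proxy.charge(token, 1999, "a"))  # processor-a approved ****4242 for 1999
print(proxy.charge(token, 1999, "b"))  # processor-b approved ****4242 for 1999
```

Switching processors changes only the `processor` argument. The merchant's stored token stays the same, which is how the platform avoids locking the merchant into one processor.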

Why use a tokenization platform?

The benefits of tokenization shrink if you build and manage it internally. Doing so demands a substantial up-front investment of time and resources, plus the significant ongoing burden of securing the system and keeping it compliant for its entire lifecycle.

Most companies that tokenize their sensitive data turn to third-party platforms. In other words, much of tokenization’s value, and the main reason to adopt it, lies in the platforms that offer it as a service.

Flexible to Work With System Constraints

Since tokens simply reference underlying data, they can look like anything. Tokens can be a set of random characters or look like the sensitive data they reference. This “format preserving” capability makes tokens easy to validate, identify, and store, especially with existing systems.

For example, if you store the six-digit postal code 492914 in a tokenization platform, you might get back the token 692914, which keeps the original length and preserves the last four digits.

This is called aliasing. Tokens formatted this way may also be easier to store since they follow the patterns of the data they hide.
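A format-preserving alias like the one above can be generated by randomizing every digit except a suffix you choose to reveal. This is a minimal sketch with the following assumptions. `format_preserving_alias` is a hypothetical helper. The alias is random rather than computed from the value, apart from the deliberately kept suffix. A real platform would also store the alias-to-value mapping in its vault and reject aliases already in use.

```python
import secrets

DIGITS = "0123456789"


def format_preserving_alias(value: str, keep_last: int = 4) -> str:
    """Hypothetical helper: same length, all digits, last `keep_last` digits kept."""
    if not value.isdigit():
        raise ValueError("this sketch only handles all-digit values")
    if not 0 < keep_last < len(value):
        raise ValueError("keep_last must leave at least one digit to randomize")
    while True:
        # Random digits replace everything except the revealed suffix.
        prefix = "".join(secrets.choice(DIGITS) for _ in range(len(value) - keep_last))
        alias = prefix + value[-keep_last:]
        if alias != value:  # never hand back the original value as its own token
            return alias


alias = format_preserving_alias("492914")
print(alias)  # six digits ending in 2914; the first two digits are random
```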

Easy to Integrate with Other Systems

Depending on the tokenization platform, tokenized data can be easy to integrate with other systems. Suppose your platform offers an outbound proxy: a server that makes requests on your behalf and swaps tokens for the underlying sensitive data before forwarding each request to its final destination. This gives you a straightforward way to send sensitive data to another system without that data ever passing through, or adding risk to, your own systems.
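The outbound proxy described above can be sketched as follows. `OutboundProxy`, the `tok_` token format, and the destination URL are hypothetical. The "forwarding" step is a plain function standing in for an HTTP call to the third party, and the sketch assumes stored values need no escaping when placed into the request body, which holds for digit-only card numbers.

```python
import json
import re
import secrets
from typing import Callable, Dict, List, Tuple

TOKEN_PATTERN = re.compile(r"tok_[0-9a-f]{16}")


class OutboundProxy:
    """Toy outbound proxy that detokenizes request bodies (hypothetical API)."""

    def __init__(self, forward: Callable[[str, str], str]) -> None:
        self._vault: Dict[str, str] = {}
        self._forward = forward  # delivers (url, body) to the final destination

    def tokenize(self, value: str) -> str:
        token = "tok_" + secrets.token_hex(8)  # 16 hex characters
        self._vault[token] = value
        return token

    def send(self, url: str, body: str) -> str:
        # Swap every token in the body for its underlying value, then forward.
        detokenized = TOKEN_PATTERN.sub(lambda m: self._vault[m.group(0)], body)
        return self._forward(url, detokenized)


# Stand-in for the third-party API; records what it receives.
received: List[Tuple[str, str]] = []


def fake_destination(url: str, body: str) -> str:
    received.append((url, body))
    return "200 OK"


proxy = OutboundProxy(fake_destination)
card_token = proxy.tokenize("4242424242424242")
request_body = json.dumps({"card": card_token, "amount": 1999})  # what your system sends
print(proxy.send("https://processor.example/charge", request_body))  # 200 OK
print(received[0][1])  # {"card": "4242424242424242", "amount": 1999}
```

Your system only ever builds `request_body`, which contains the token. The card number exists only between the proxy and the destination.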

Safe to Expose

Tokens are safe to expose because they are not derived from the data they reference. In fact, a token doesn’t need to have anything in common with its underlying data. As a result, a token can’t be reversed without access to the tokenization platform, and a leaked token on its own reveals nothing about the data behind it. That makes it safe to pass tokens between systems.
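To see why not being derived from the data matters, compare a value computed from the data with a random token. In this sketch an unsalted SHA-256 hash stands in for any derived value. Hashing is not tokenization, and the ZIP code is made up. Because there are only 100,000 five-digit ZIP codes, the hash can be reversed by trying them all, while the random token gives an attacker nothing to search. The protection still depends on the vault: anyone with access to the platform can detokenize.

```python
import hashlib
import secrets
from typing import Optional


def derived_value(zip_code: str) -> str:
    # NOT a token: an unsalted hash is computed from the data itself.
    return hashlib.sha256(zip_code.encode()).hexdigest()


def brute_force(candidate: str) -> Optional[str]:
    # An attacker tries every five-digit ZIP code until one hashes to `candidate`.
    for n in range(100_000):
        guess = f"{n:05d}"
        if derived_value(guess) == candidate:
            return guess
    return None


print(brute_force(derived_value("90210")))  # 90210: the hash gave the data away
print(brute_force(secrets.token_hex(16)))   # None: the random token is no ZIP code's hash
```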

Minimize or Eliminate Compliance Requirements

Compliance is necessary, but it is a significant burden for organizations to bear, particularly the 12 PCI DSS requirements.

Audits and other work that generates no business value consume staff time, schedules, and budgets. Across the alphabet soup of private and public data standards and regulations, like PCI DSS, GDPR, HIPAA, and more, tokenization and specialized tokenization platform providers are recognized ways to help organizations reduce their compliance scope and secure their data.

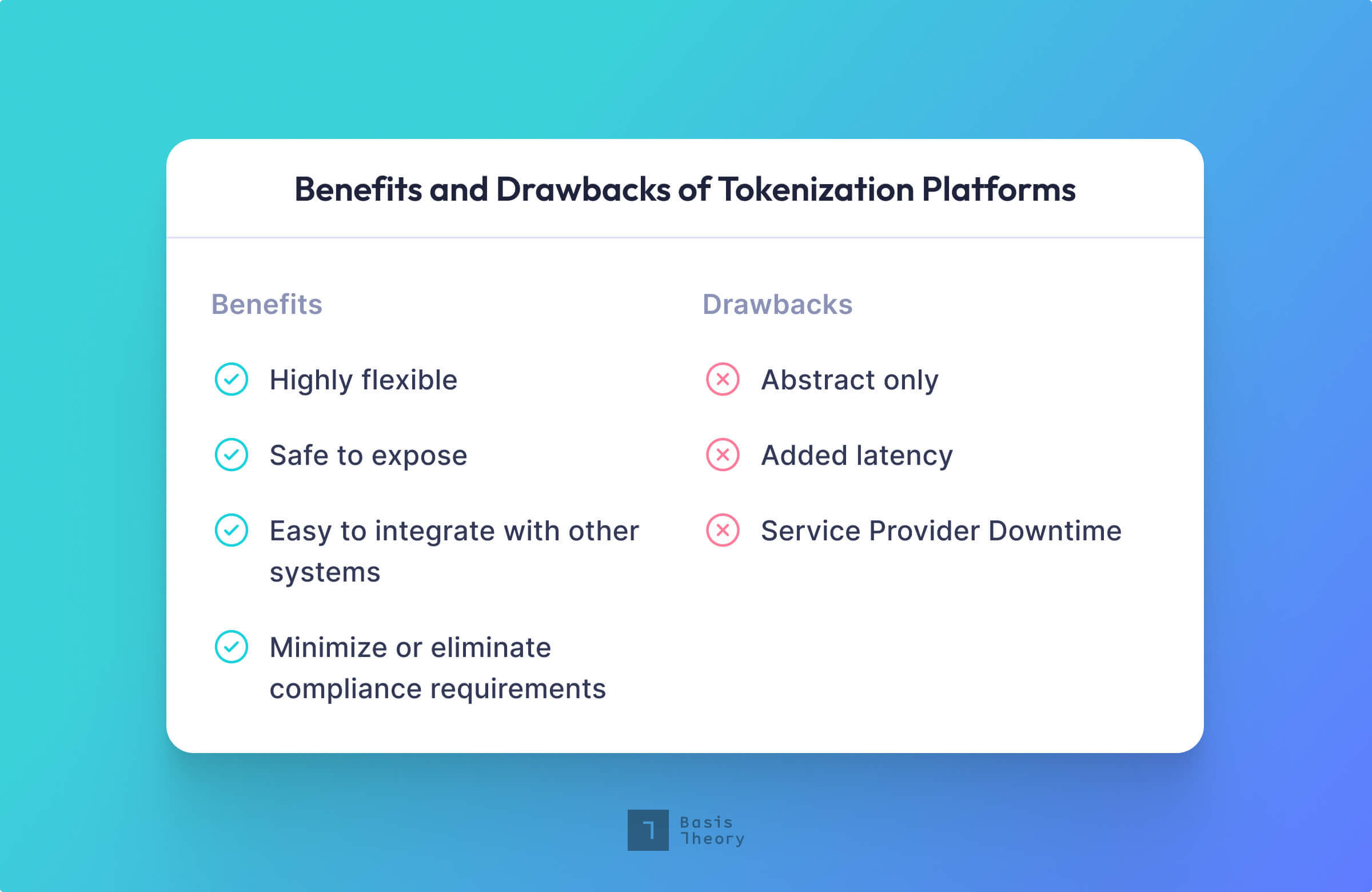

Working with a Tokenization Platform

There’s no such thing as a perfect solution when it comes to security, but acknowledging challenges helps us weigh the pros and cons of any solution.

Abstract Only

By most standards, storing sensitive data and returning an identifier isn’t enough to properly restrict access to that data. At the very least, a tokenization platform should use an authentication mechanism, like OAuth, to control who can access the data. As an additional layer of protection, the platform may also encrypt the stored data so that it stays protected against unauthorized access.

In short, safeguarding the stored data takes more than tokenization alone. Fortunately, most tokenization platforms offer authentication, encryption, and permissioning features to keep your data safe.

Latency

For tokenized data to be usable, a request must be made to the tokenization platform to retrieve the underlying data. Each of these requests adds latency, which could hurt the user experience. While the added latency is negligible in most cases, tokenization may not be ideal if your system requires an immediate response.

Fortunately, the impact of latency can be addressed through geo-replication, horizontal and vertical scaling of resources, concurrency, and caching.
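Caching is the simplest of these techniques to sketch. Below, a read-through cache with a time-to-live (TTL) serves repeated lookups from memory and goes back to the backing store only when an entry expires. This is a generic, hypothetical sketch, not any platform's implementation, and the 30-second TTL is arbitrary. It assumes the cache runs inside the platform's own secured environment, because caching detokenized values in your own systems would bring that data back into compliance scope.

```python
import time
from typing import Callable, Dict, List, Tuple


class TTLCache:
    """Read-through cache whose entries expire after `ttl_seconds` (hypothetical)."""

    def __init__(self, loader: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._loader = loader  # the slow lookup, e.g. a vault read
        self._ttl = ttl_seconds
        self._clock = clock    # injectable so expiry can be tested
        self._entries: Dict[str, Tuple[float, str]] = {}  # key -> (expires_at, value)

    def get(self, key: str) -> str:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]        # fresh hit: no round trip to the backing store
        value = self._loader(key)  # miss or expired: fetch and re-cache
        self._entries[key] = (now + self._ttl, value)
        return value


lookups: List[str] = []


def slow_vault_read(token: str) -> str:
    lookups.append(token)  # count how often the backing store is hit
    return "value-for-" + token


fake_now = [0.0]
cache = TTLCache(slow_vault_read, ttl_seconds=30, clock=lambda: fake_now[0])
cache.get("tok_1")
cache.get("tok_1")   # served from the cache
fake_now[0] = 31.0
cache.get("tok_1")   # entry expired, so the store is read again
print(len(lookups))  # 2
```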

Service Provider Downtime

Like any cloud service, a tokenization platform must stay available to serve legitimate requests and must scale to meet its clients’ demand. For seamless workflows, it should have safeguards against traffic spikes and outages. Anyone considering tokenization must account for this extra dependency.

Prudent systems address downtime through redundancies, self-healing operations, heartbeats and pings, synthetic tests, and 24/7 support.
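On the client side, one simple complement to a provider's redundancy is to try a list of redundant endpoints in order. The endpoint URLs, `call_with_failover`, and the fake `request` function below are hypothetical. A production client would also add timeouts, retries with backoff, and health checks.

```python
from typing import Callable, List, Sequence


class AllEndpointsDown(Exception):
    """Raised when every redundant endpoint fails."""


def call_with_failover(endpoints: Sequence[str], request: Callable[[str], str]) -> str:
    errors: List[str] = []
    for endpoint in endpoints:
        try:
            return request(endpoint)  # first healthy endpoint wins
        except ConnectionError as exc:
            errors.append(f"{endpoint}: {exc}")  # remember why, then try the next one
    raise AllEndpointsDown("; ".join(errors))


def fake_request(endpoint: str) -> str:
    # Simulate an outage in the primary region.
    if "us-east" in endpoint:
        raise ConnectionError("region unavailable")
    return f"detokenized via {endpoint}"


ENDPOINTS = ["https://us-east.tokens.example", "https://us-west.tokens.example"]
print(call_with_failover(ENDPOINTS, fake_request))
```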

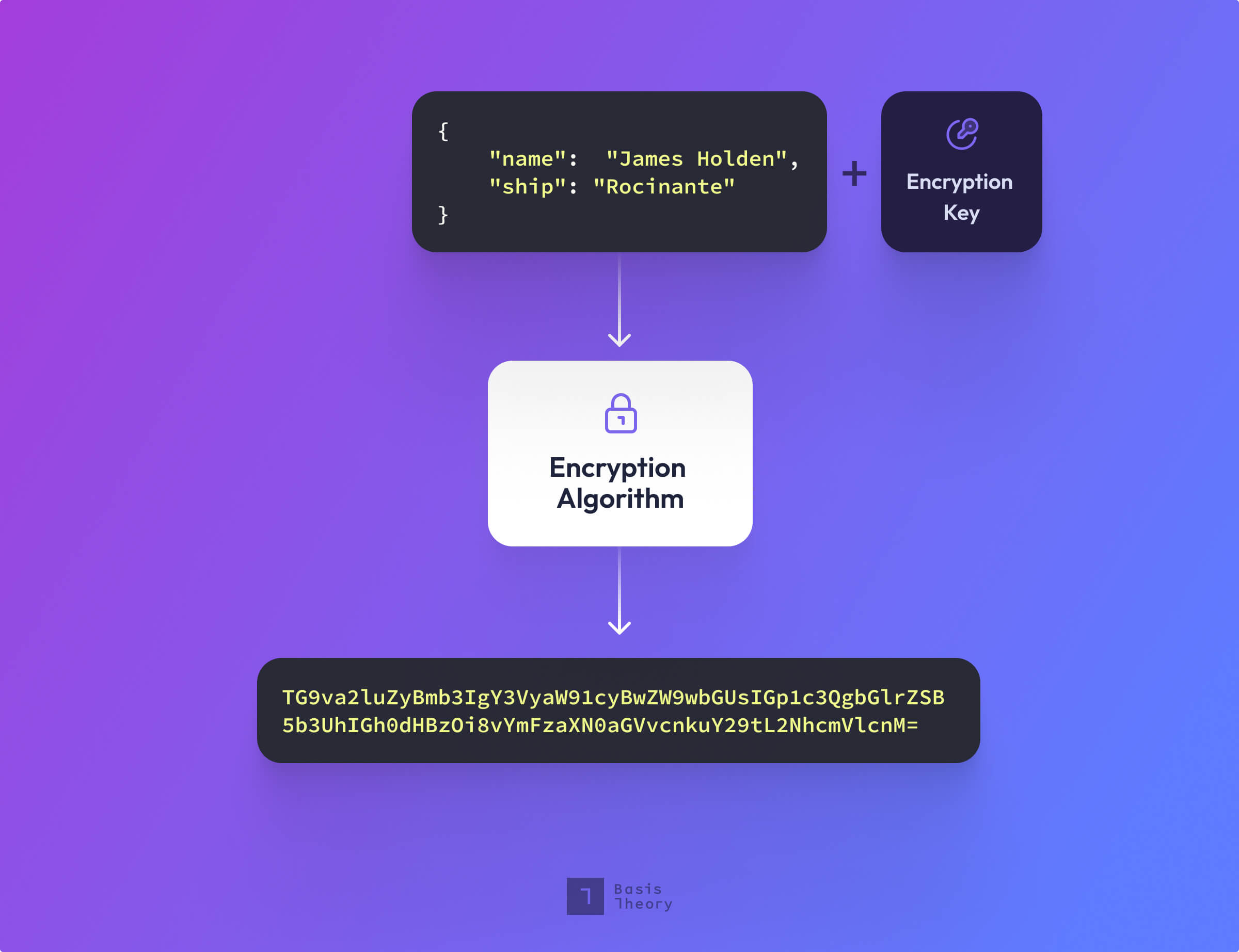

Encryption vs Tokenization

Encryption converts plaintext data into an unrecognizable string of numbers, letters, and symbols known as ciphertext. The ciphertext is a computed value based on a key and the plaintext data. To recover the original sensitive data from the ciphertext, you need a key capable of converting the ciphertext back into plaintext.

Encryption and tokenization are more different than they are similar. We found that contrasting the two would be more valuable in describing each method and in deciding which to use in what situations.

Format

As discussed earlier, tokens can take any shape, making them easier for both machines and humans to read and share. They can be validated and identified without risk of exploitation. Encryption is significantly different: you generally have little control over the format of the resulting ciphertext. Organizations adopting AES-256, for example, need to ensure their systems, applications, and databases can accommodate ciphertext that is typically longer than the original 16-digit card number.
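The length difference is easy to quantify. The sketch below performs no encryption. It only computes how many bytes, and how many base64 characters, a 16-digit card number occupies after AES encryption under two common storage layouts. Both layouts are assumptions: AES-GCM stored as nonce, ciphertext, and tag (12-byte nonce, 16-byte tag), and AES-CBC stored as an IV plus PKCS#7-padded ciphertext. AES-256's 256-bit key doesn't change these sizes, because AES always operates on 16-byte blocks.

```python
import base64
from typing import Tuple

CARD_NUMBER = "4242424242424242"  # 16 ASCII digits = 16 bytes


def base64_length(n_bytes: int) -> int:
    # Length of the base64 text needed to store n_bytes in a text column.
    return len(base64.b64encode(bytes(n_bytes)))


def aes_gcm_size(plaintext_len: int, nonce_len: int = 12, tag_len: int = 16) -> Tuple[int, int]:
    # GCM adds no padding, but the nonce and authentication tag are stored too.
    raw = nonce_len + plaintext_len + tag_len
    return raw, base64_length(raw)


def aes_cbc_size(plaintext_len: int, iv_len: int = 16, block: int = 16) -> Tuple[int, int]:
    # PKCS#7 always adds padding, so a full 16-byte block grows to 32 bytes.
    padded = (plaintext_len // block + 1) * block
    raw = iv_len + padded
    return raw, base64_length(raw)


print(aes_gcm_size(len(CARD_NUMBER)))  # (44, 60)
print(aes_cbc_size(len(CARD_NUMBER)))  # (48, 64)
```

Under either layout, a column sized for 16 characters can no longer hold the value, whereas a format-preserving token would fit unchanged.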

Vulnerability

Encrypted data is created by performing operations on the sensitive data itself. In some cases, such as when a weak algorithm is used or an attacker learns enough about the algorithm and the environment it ran in, ciphertext can be reversed to plaintext without the key. Modern encryption algorithms make such attacks expensive and time-consuming, but continued advances in computing power and cryptanalysis keep this a never-ending game of cat and mouse. Tokens, on the other hand, are not created from the sensitive data, so they generally have nothing in common with the data they conceal.

Authentication and Authorization

With encryption, the decryption key is what authorizes you to retrieve the data, and in most cases a given ciphertext has only one key. Consequently, to revoke someone’s access, you must re-encrypt the data under a new key, producing a new ciphertext. This can be inconvenient and unwieldy.

With tokenization, depending on the platform, there may be many ways to authenticate and authorize: API keys, PINs, passphrases, certificates, usernames and passwords, and more. Better still, your permissions can be entirely separate from those of anyone you’ve granted access to your tokens, so you can manage your own access independently of theirs.
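The contrast with a single decryption key can be sketched with a toy vault in which each API key carries its own grants. The class, its methods, and the key format are hypothetical. Real platforms express this through their own access-policy APIs. The point is that revoking one party's access changes only that party's grants, with no re-encryption and no impact on anyone else.

```python
import secrets
from typing import Dict, Set


class PermissionedVault:
    """Toy vault where every API key has its own grants (hypothetical model)."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}         # token -> sensitive value
        self._grants: Dict[str, Set[str]] = {}  # api key -> tokens it may read

    def tokenize(self, value: str) -> str:
        token = "tok_" + secrets.token_hex(8)
        self._data[token] = value
        return token

    def issue_key(self) -> str:
        api_key = "key_" + secrets.token_hex(8)
        self._grants[api_key] = set()
        return api_key

    def grant(self, api_key: str, token: str) -> None:
        self._grants[api_key].add(token)

    def revoke(self, api_key: str, token: str) -> None:
        # Only this key loses access; the data and the token are untouched.
        self._grants[api_key].discard(token)

    def can_read(self, api_key: str, token: str) -> bool:
        return token in self._grants.get(api_key, set())

    def detokenize(self, api_key: str, token: str) -> str:
        if not self.can_read(api_key, token):
            raise PermissionError("this API key has no grant for this token")
        return self._data[token]


vault = PermissionedVault()
ssn_token = vault.tokenize("123-45-6789")  # fake SSN
owner_key, partner_key = vault.issue_key(), vault.issue_key()
vault.grant(owner_key, ssn_token)
vault.grant(partner_key, ssn_token)

vault.revoke(partner_key, ssn_token)           # no re-encryption needed
print(vault.detokenize(owner_key, ssn_token))  # 123-45-6789
print(vault.can_read(partner_key, ssn_token))  # False
```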

Prevalence

Encryption is ubiquitous: implementations of standard encryption algorithms exist on just about every platform. Encryption has been around for a long time, has many standards, and has established itself as the de facto tool for securing data. Tokenization, by contrast, has become a core component of some vital processes, especially in the payment industry, and continues to gain a foothold in other settings. New platforms and integrations with different technologies appear every day, and tokenization standards may soon be in everyday use.

Independence

Encryption scrambles a raw value while tokenization generates a net new value. While both can be stored within a system, encrypted values carry the raw data with them wherever they go, whereas tokens reference the encrypted values held elsewhere. By keeping an encrypted value secured in a specialized database and using a token in your systems instead, you essentially decouple the raw data's risk from its utility. This independence is one of the big reasons developers and CISOs alike prefer tokens.

Do I encrypt or tokenize?

Choosing between encryption and tokenization often comes down to a question of how often the data needs to be accessed.

Encryption works best when a small number of systems need access to the concealed data. To make ciphertext meaningful to other systems, those systems must be able to retrieve the decryption keys, and unless you already use an established protocol, making those keys safely available can be difficult. Leaked keys also feature in many data breaches, as incidents at Samsung and NordVPN, both of which involved exposed or stolen keys, have shown. Encryption is an excellent option when a limited set of trusted actors needs access to sensitive data.

Tokenization, on the other hand, is best when a store of sensitive data is utilized by many actors. These systems can offer forward and reverse proxies, and direct integrations. This makes sending sensitive data seamless and secure. Additionally, tokenization platforms let administrators easily retain control by making it straightforward to revoke or grant access to the underlying data. Tokenization is also ideal when you need to share sensitive data, and for simpler workflows across various systems.

At Basis Theory, we encrypt and tokenize data, as well as offer APIs to administer the back-end processes (like key management and access policies) needed to govern both.

Tokens and tokenization use cases are still in their infancy, but the next evolution of tokens goes beyond serving as a simple reference to the raw data. For example, Basis Theory’s tokens let developers configure multiple properties on a single token, tailoring its permissions and masks and preserving its format and length. Today’s tokens can be searched, fingerprinted, and tagged, and with services like Proxy, they can be shared with any third-party endpoint.
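Fingerprinting and masking can be illustrated with standard-library primitives. How any particular platform computes fingerprints isn't stated here. This sketch assumes a keyed HMAC-SHA256 whose key (the hypothetical `FINGERPRINT_KEY`) stays inside the platform. Under that assumption, equal values produce equal fingerprints for deduplication and search, while anyone without the key can't brute-force them the way they could an unsalted hash. The `mask` helper is likewise hypothetical.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret held only by the platform.
FINGERPRINT_KEY = secrets.token_bytes(32)


def fingerprint(value: str) -> str:
    # Deterministic for a given key: the same card always fingerprints the same.
    return hmac.new(FINGERPRINT_KEY, value.encode(), hashlib.sha256).hexdigest()


def mask(card_number: str, visible: int = 4) -> str:
    # Reveal only the last `visible` digits.
    return "*" * (len(card_number) - visible) + card_number[-visible:]


# Duplicate detection: compare fingerprints instead of raw values.
same_card = fingerprint("4242424242424242") == fingerprint("4242424242424242")
different_card = fingerprint("4242424242424242") == fingerprint("4000056655665556")
print(same_card, different_card)  # True False
print(mask("4242424242424242"))   # ************4242
```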

Register for a free account and check out our Getting Started Guides to begin testing a tokenization platform.
